Griptape 0.26.0 is now available on PyPI. This update brings many new features designed to improve your development experience by simplifying configuration, extending functionality, and improving system interactions. Let’s dive into how these new tools and updates can bring efficiency and power to your projects.

Powering Up Audio and Real-Time Functionalities

With the introduction of the AzureOpenAiStructureConfig, you can integrate Azure OpenAI services much more easily. This tool acts as a central hub for all your Azure OpenAI Driver settings, streamlining the setup process and reducing complexity. Imagine setting up a new smart home system where this tool serves as the central control panel, allowing you to manage all your connections and configurations from one place.

Before the AzureOpenAiStructureConfig was introduced, you had to initialize every driver you needed with the same, repetitive values.

With the new Config, you no longer need to repeat that configuration: it initializes all base drivers for you with the values you specify.
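
The idea of declaring shared values once and deriving every driver from them can be illustrated without Griptape itself. The following is a minimal sketch in plain Python. `AzureSettings`, `FakeDriver`, and `build_drivers` are hypothetical names invented for this illustration and are not part of the Griptape API, and the endpoint and key are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AzureSettings:
    """Shared connection values, declared once (hypothetical, not a Griptape class)."""
    azure_endpoint: str
    api_key: str


@dataclass(frozen=True)
class FakeDriver:
    """Stand-in for any driver that needs the shared connection values."""
    kind: str
    azure_endpoint: str
    api_key: str


def build_drivers(settings: AzureSettings, kinds: list[str]) -> dict[str, FakeDriver]:
    """Create one driver per kind, all reusing the same settings object."""
    return {
        kind: FakeDriver(kind, settings.azure_endpoint, settings.api_key)
        for kind in kinds
    }


# Placeholder values; a real setup would read these from the environment.
settings = AzureSettings(azure_endpoint="https://example.invalid", api_key="placeholder")
drivers = build_drivers(settings, ["prompt", "embedding", "image_query"])
```

Changing the endpoint or key in one place updates every derived driver, which is the convenience the Config is described as providing.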

For developers working with image and audio content, the new AzureOpenAiVisionImageQueryDriver and AudioLoader are game changers of a different sort. The AzureOpenAiVisionImageQueryDriver extends your application’s ability to understand and analyze images, and it adds support for working with OpenAI’s multi-modal models within the Azure ecosystem. Think of it as giving your app a pair of 'intelligent eyes'.

The AudioLoader, on the other hand, simplifies importing audio files into your system, much like an efficient digital librarian who organizes and prepares all audio materials for quick access and processing. It lets you load audio files from disk and transform them into Griptape Artifacts. Once loaded, these artifacts integrate with functionality such as the newly introduced AudioTranscriptionTask, which turns spoken words into written text. Whether you're developing voice-activated systems or automating transcription workflows, this feature simplifies the initial step of accessing and using audio content within your projects.

Our release also focuses on improving audio processing capabilities. The AudioTranscriptionTask and AudioTranscriptionClient work together to transform audio content into text. This is well suited to applications such as voice-activated tools or automated transcription services. It can be compared to having an assistant who takes notes for you, ensuring no piece of information is missed.

The following code example takes a downloaded audio file, transcribes it, translates it, and outputs new audio files with the translations. It uses the ElevenLabsTextToSpeechDriver introduced in Griptape 0.25.

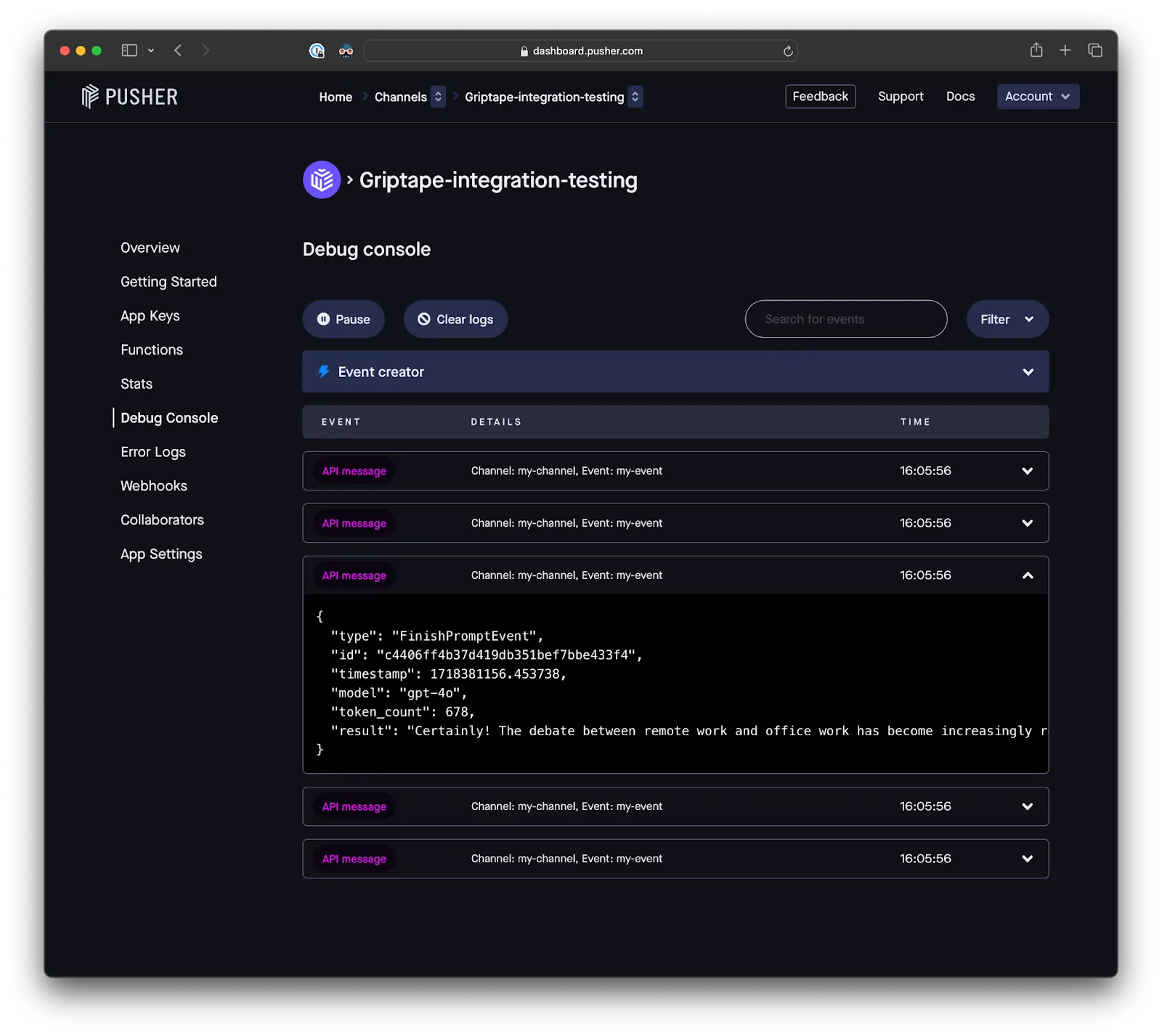

For real-time application needs, the PusherEventListenerDriver enables instantaneous communication within your projects using Pusher WebSockets. This matters for applications that depend on real-time updates, such as live sports apps or instant messaging platforms, where speed and responsiveness are crucial.

You can set up an Agent to respond to events pushed to it, and you can see the Agent’s response to each event in the Pusher console.
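
The general shape of an event listener that forwards events through a driver can be sketched in plain Python. This is an illustration only: it does not use Pusher or Griptape, and `InMemoryChannelDriver`, `Listener`, the channel name, and the event name are all hypothetical. The stand-in driver records messages in a list instead of sending them over a WebSocket.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class InMemoryChannelDriver:
    """Hypothetical stand-in for a WebSocket driver: records instead of sending."""
    channel: str
    sent: list = field(default_factory=list)

    def publish(self, event_name: str, payload: dict) -> None:
        # A real driver would push this payload to a remote channel.
        self.sent.append((self.channel, event_name, payload))


@dataclass
class Listener:
    """Transforms each event with `handler`, then hands the result to the driver."""
    handler: Callable[[dict], dict]
    driver: InMemoryChannelDriver

    def on_event(self, event: dict) -> None:
        self.driver.publish("agent-event", self.handler(event))


driver = InMemoryChannelDriver(channel="my-channel")
# The handler decides which parts of an event are forwarded.
listener = Listener(handler=lambda e: {"output": e["output"]}, driver=driver)
listener.on_event({"type": "finish", "output": "hello"})
```

Keeping the handler separate from the driver means the same filtering logic could be reused with a different transport.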

Streamlining and Securing Environment Management

Finally, our update improves environment management by adding the env parameter to BaseStructureRunDriver. This gives you more precise control over the execution environment of your structures when using the LocalStructureRunDriver or the GriptapeCloudStructureRunDriver. Specifically, it lets you define and adjust the environment variables that dictate how a structure operates at runtime, so you can tailor the execution environment to the specific needs of your application. This level of control is valuable for maintaining stability and efficiency, especially when deploying complex systems that must operate consistently across varied configurations.

The env parameter lets you pass runtime configuration for a specific run of the structure.
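
The concept of per-run environment variables can be sketched with the standard library alone. The code below is not the Griptape implementation: `temporary_env`, `run_structure`, `my_structure`, and the variable name `GT_SKETCH_MODEL_NAME` are hypothetical, chosen only to show variables being applied for one run and restored afterwards.

```python
import os
from contextlib import contextmanager
from typing import Callable, Dict, Iterator


@contextmanager
def temporary_env(env: Dict[str, str]) -> Iterator[None]:
    """Apply `env` to os.environ for the duration of the block, then restore."""
    previous = {key: os.environ.get(key) for key in env}
    os.environ.update(env)
    try:
        yield
    finally:
        for key, old_value in previous.items():
            if old_value is None:
                os.environ.pop(key, None)  # variable did not exist before
            else:
                os.environ[key] = old_value


def run_structure(structure: Callable[[], str], env: Dict[str, str]) -> str:
    """Hypothetical runner: executes `structure` with per-run environment variables."""
    with temporary_env(env):
        return structure()


def my_structure() -> str:
    # Reads its configuration from the environment at run time.
    return os.environ.get("GT_SKETCH_MODEL_NAME", "default-model")


result = run_structure(my_structure, env={"GT_SKETCH_MODEL_NAME": "example-model"})
```

Because the variables are restored in a `finally` block, a failed run does not leak configuration into the next one.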

Together, these features and improvements not only strengthen the robustness and capability of your applications but also simplify the management and integration of complex systems. Whether you are building highly interactive applications, processing extensive data, or integrating sophisticated AI models, Griptape 0.26.0 is built to lift your projects to new heights. We can’t wait to see how you make it to the top.

We also want to re-introduce you to the off_prompt functionality that is configured on all Griptape tools. This feature allows developers to choose whether a tool's results are stored directly in TaskMemory or returned immediately to the Large Language Model (LLM). By setting off_prompt to True, results are saved in TaskMemory, ideal for sensitive or large data that you might want to process or analyze later. Conversely, setting it to False returns results directly to the LLM, speeding up interactions when immediate response is preferred. This flexibility offers customized control over data handling to optimize performance and security based on your application's needs.

In Griptape 0.26.0, all Tools now default to off_prompt=False, and you must set off_prompt to True if you wish to opt in to using TaskMemory. A more detailed write-up and examples can be found in the technical documentation.
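
The routing behavior described above can be modeled with a small toy in plain Python. `ToyTaskMemory` and `ToyTool` are hypothetical classes written for this illustration, not Griptape's own; only the `off_prompt` flag name and its default of False come from the release notes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ToyTaskMemory:
    """Hypothetical stand-in for TaskMemory: stores results under a generated key."""
    store: Dict[str, str] = field(default_factory=dict)

    def save(self, value: str) -> str:
        key = f"artifact-{len(self.store) + 1}"
        self.store[key] = value
        return key


@dataclass
class ToyTool:
    """Hypothetical tool wrapper with an off_prompt switch."""
    run: Callable[[str], str]
    memory: ToyTaskMemory
    off_prompt: bool = False  # mirrors the 0.26.0 default described above

    def execute(self, query: str) -> str:
        result = self.run(query)
        if self.off_prompt:
            # Keep the raw result out of the prompt; return only a reference.
            key = self.memory.save(result)
            return f"Output stored in memory under '{key}'"
        # Default path: the result goes straight back to the LLM.
        return result


memory = ToyTaskMemory()
direct = ToyTool(run=str.upper, memory=memory)
stored = ToyTool(run=str.upper, memory=memory, off_prompt=True)

direct_reply = direct.execute("hello")
stored_reply = stored.execute("secret")
```

In this toy, the model-facing reply for the off-prompt tool contains only a reference, while the actual value stays in memory for later processing.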