The Griptape Framework (Griptape) is an open-source Python library that helps you configure and interact with Large Language Models (LLMs). One of the powerful features Griptape offers is the ability to store information in TaskMemory, a configurable vector store, during execution instead of sending all of that information back to the LLM. This is useful for several reasons. For instance, a user may not want their information sent back to the LLM for security purposes. It also helps with large content that can blow up prompt size and token counts. Using TaskMemory can also cut the cost of using LLMs, since that cost is directly tied to the token count of the messages sent. If this list of benefits already seems too technical, or you’re not sure how to take advantage of them, let’s walk through a few explanations and examples.

Prompt Size and Token Count

Prompt size is also referred to as context window size in some official documentation, but what it comes down to is how much data you can send to the LLM in a single prompt to generate a response. LLMs tend to give more reliable and consistent responses when they are seeded with examples of the information you are looking for, or with rules to follow when responding. Retrieval augmented generation (RAG) is another common use case: you send along the data sources you want the LLM to search through in order to find the correct answer. This is useful, for example, if the information comes after a model’s training data cutoff date, or if the data you want to search is not available on the public web. All of these inputs count towards the prompt size, and while the maximum prompt size keeps growing with new models, it remains one of the biggest limiting factors for getting useful and consistent results from an LLM.

Tokens are the basic units of data that an LLM uses for both input and output. A token can be a whole word, but more commonly it is a fragment of a word, a punctuation mark, or a short run of characters; the LLM uses tokens to find and store relationships in data. For an example of how quickly token count can grow, consider the Legend of Zelda fandom wiki. This page has roughly 67k words (at the time of writing), which GPT-4o would tokenize into roughly 100k tokens. If you wanted to provide multiple sources, as well as some rules to guide the LLM to a consistent answer, you can see how the token count could quickly exceed even GPT-4o’s large 128k-token context window.
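To make the arithmetic concrete, here is a rough budgeting sketch. It assumes the ratio implied by the example above (about 100k tokens for 67k words, so roughly 1.5 tokens per word); real counts depend on the model’s tokenizer, and the 500-word block of rules is a hypothetical figure chosen for illustration.

```python
# Rough context-window budgeting, using the ratio implied by the Zelda example:
# ~67k words -> ~100k GPT-4o tokens. The ratio is an assumption; real counts
# depend on the tokenizer and the text.
from typing import List

TOKENS_PER_WORD = 100_000 / 67_000  # ~1.49 tokens per word (assumed)
GPT_4O_CONTEXT_LIMIT = 128_000      # limit quoted in the text


def estimate_tokens(word_count: int, tokens_per_word: float = TOKENS_PER_WORD) -> int:
    """Estimate the token count of a text from its word count."""
    return round(word_count * tokens_per_word)


def fits_in_context(word_counts: List[int], limit: int = GPT_4O_CONTEXT_LIMIT) -> bool:
    """Return True if all pieces of a prompt together stay within the limit."""
    return sum(estimate_tokens(w) for w in word_counts) <= limit


if __name__ == "__main__":
    wiki_page = 67_000  # the fandom wiki page
    rules = 500         # hypothetical block of rules and examples
    print(estimate_tokens(wiki_page))                           # 100000
    print(fits_in_context([wiki_page, rules]))                  # True
    print(fits_in_context([wiki_page, wiki_page // 2, rules]))  # False
```

Adding just one more source half the size of the wiki page pushes the estimate to roughly 150k tokens, past the limit.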

A Basic Griptape Agent

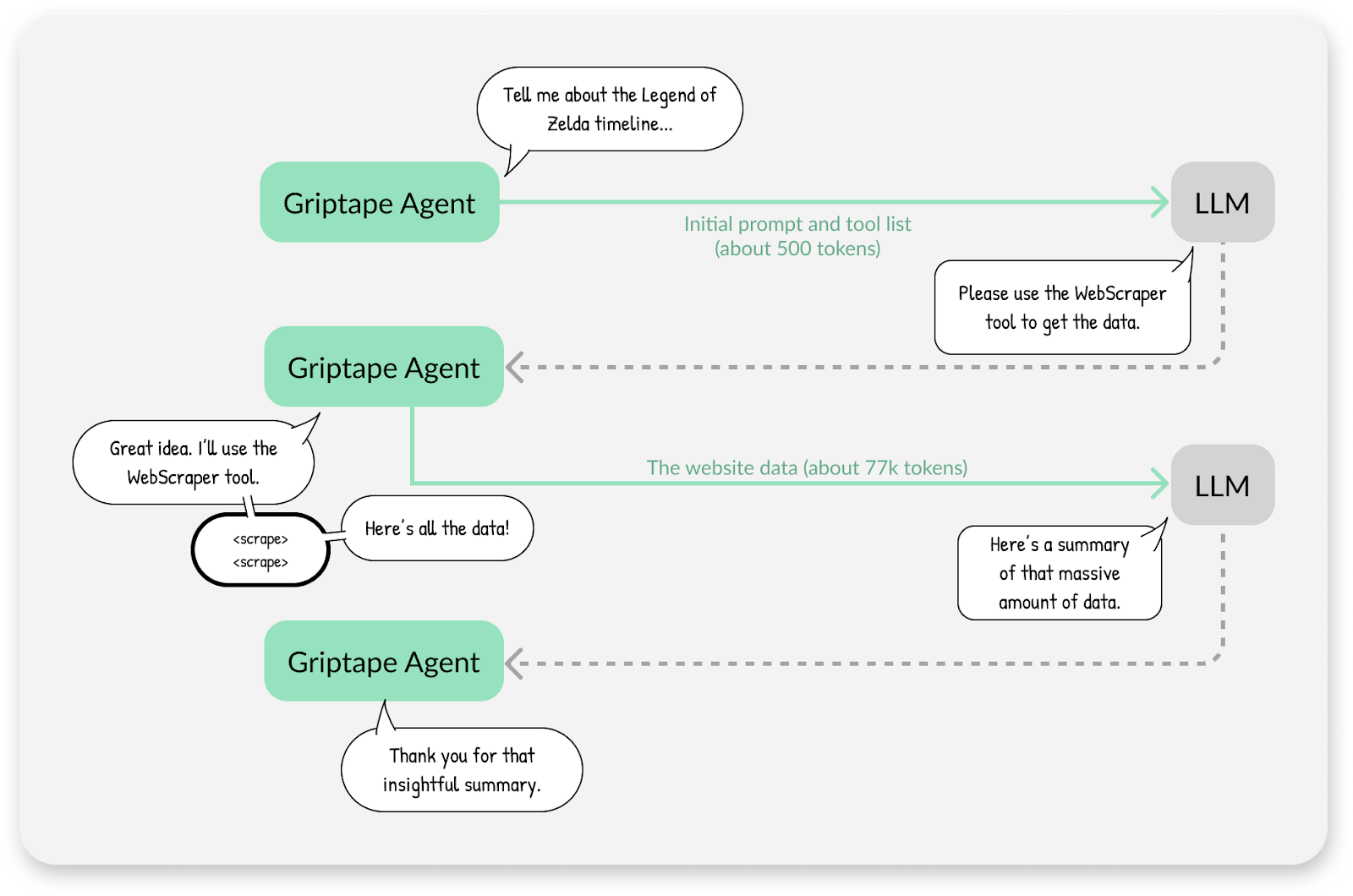

Let’s step back from how LLMs work and focus on how the Griptape Framework works and how it can help with problems like an oversized prompt. Griptape provides structures such as agents and workflows for organizing tasks, as well as a collection of pre-built tools that help accomplish the tasks you request. When you create an agent, you can specify a set of tools the agent has at its disposal. When you run the agent with an initial prompt, it sends the prompt and the tool definitions to the LLM, which in turn sends back instructions on how to use the tools to accomplish the task. This loop continues until the LLM is satisfied that it has enough information to respond to the initial prompt.
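The loop described above can be sketched in plain Python. This is a hypothetical illustration of the orchestration pattern, not Griptape’s implementation or API: `LlmReply`, `run_agent`, `fake_llm`, and the `web_scraper` entry are made-up stand-ins for a real prompt driver and a real scraping tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class LlmReply:
    """What a hypothetical LLM sends back: a tool request or a final answer."""
    tool: Optional[str] = None    # name of the tool the agent should run
    tool_input: str = ""          # input to pass to that tool
    answer: Optional[str] = None  # set once the LLM has enough information


def run_agent(
    prompt: str,
    llm: Callable[[List[str], List[str]], LlmReply],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
):
    """Send history + tool names to the LLM, run the tool it asks for,
    append the output to the history, and stop when it returns an answer."""
    history = [f"user: {prompt}"]
    llm_calls = 0
    for _ in range(max_steps):
        reply = llm(history, list(tools))
        llm_calls += 1
        if reply.answer is not None:
            return reply.answer, llm_calls
        output = tools[reply.tool](reply.tool_input)
        # Default (on-prompt) behavior: the full tool output joins the next prompt.
        history.append(f"{reply.tool} output: {output}")
    raise RuntimeError("no answer within max_steps")


def fake_llm(history: List[str], tool_names: List[str]) -> LlmReply:
    """Hypothetical LLM: asks for the scraper once, then answers."""
    if len(history) == 1 and "web_scraper" in tool_names:
        return LlmReply(tool="web_scraper", tool_input="https://example.com/zelda-timeline")
    return LlmReply(answer=f"Answer built from {len(history[-1])} characters of context")


def fake_scraper(url: str) -> str:
    """Hypothetical scraper returning a stand-in for a large page."""
    return "timeline text " * 1000


answer, calls = run_agent("How do the Zelda timelines fit together?",
                          fake_llm, {"web_scraper": fake_scraper})
print(calls)  # 2: one call to pick the tool, one call carrying the whole page
```

The second call is the expensive one: everything the scraper returned is pasted into the prompt.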

Let’s go back to our Legend of Zelda fandom wiki example. We can use Griptape’s WebScraper tool to scrape the page for the information needed to answer an initial prompt about the timelines in the Zelda franchise.

As you can see, the LLM is hit only twice with this logic, but the second call contains the entire contents of the page, about 77k tokens, so that the LLM can parse through the information and find the answer to the initial prompt. This is the pattern that can blow up the prompt size, and it is one of the problems Griptape’s TaskMemory can help with.

Griptape TaskMemory

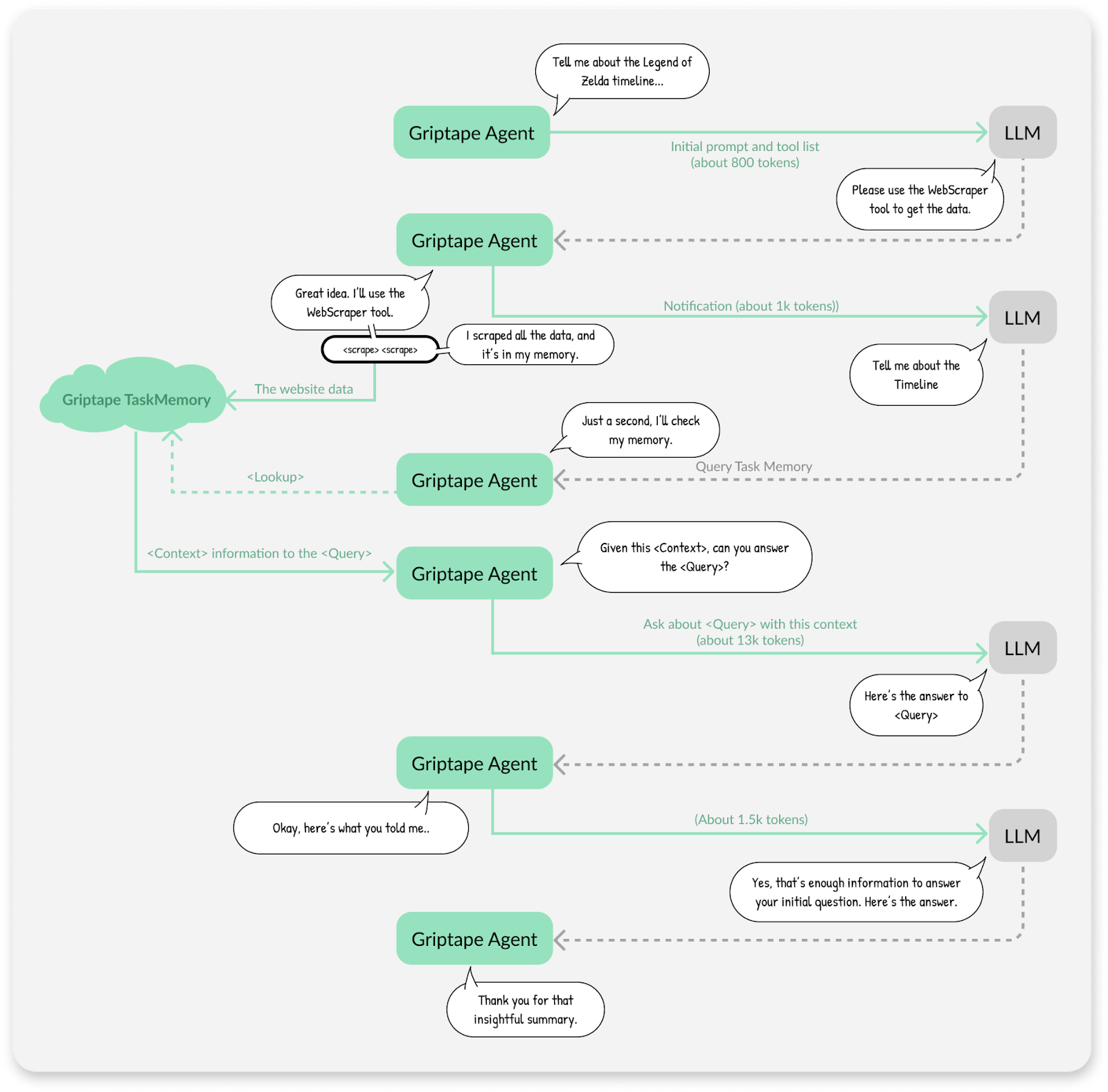

When specifying tools, you can choose whether a tool’s output goes directly back to the LLM (the default behavior) or gets stored in an object called TaskMemory. TaskMemory is enabled per tool through the off_prompt field, which gives the developer fine-grained control over what information goes back to the LLM. When using TaskMemory, the agent still treats the LLM as an orchestrator and waits for instructions based on the tools specified. However, instead of sending all of the data back to the LLM, the agent stores it in an intermediate vector store and sends only a reference to that storage back to the LLM.

If that didn’t make much sense, let’s look at another example, this time setting off_prompt to True so that all output from the WebScraper tool goes to TaskMemory. This also requires a tool that can read from TaskMemory. For this example we will use the QueryTool, but Griptape offers many tool options to fit your needs. We want the output from the QueryTool to go back to the LLM, so we leave its off_prompt at the default value of False.
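The routing that off_prompt controls can be sketched as follows. In Griptape itself this corresponds to setting off_prompt to True on the WebScraper tool and leaving it False on the QueryTool; everything below (`ToyTaskMemory`, `run_tool`, `query_memory`, the sample page text) is a hypothetical stand-in, and the toy memory is a plain dictionary rather than a vector store.

```python
import uuid
from typing import Callable, Dict


class ToyTaskMemory:
    """Hypothetical in-memory stand-in for TaskMemory (no vector store here)."""

    def __init__(self) -> None:
        self._artifacts: Dict[str, str] = {}

    def store(self, content: str) -> str:
        ref = f"memory://{uuid.uuid4().hex[:8]}"
        self._artifacts[ref] = content
        return ref

    def load(self, ref: str) -> str:
        return self._artifacts[ref]


def run_tool(tool: Callable[[str], str], tool_input: str,
             memory: ToyTaskMemory, off_prompt: bool) -> str:
    """Return what the LLM gets to see for one tool call."""
    output = tool(tool_input)
    if off_prompt:
        # Only a reference goes back to the LLM; the content stays in memory.
        return f"Output stored in task memory under {memory.store(output)}"
    return output  # default: the full output goes back to the LLM


def query_memory(memory: ToyTaskMemory, ref: str, query: str) -> str:
    """Hypothetical query tool: load the stored artifact and return the sentence
    that mentions the query (a real QueryTool would ask a model instead)."""
    for sentence in memory.load(ref).split(". "):
        if query.lower() in sentence.lower():
            return sentence
    return "No answer found."


page = ("Zelda games span many eras. The timeline splits into three branches "
        "after Ocarina of Time. Link appears in most games.")  # made-up sample


def scrape(url: str) -> str:
    """Hypothetical scraper that always returns the sample page."""
    return page


memory = ToyTaskMemory()
# Scraper with off_prompt=True: the LLM only sees a short reference.
message = run_tool(scrape, "https://example.com/zelda", memory, off_prompt=True)
ref = message.split()[-1]
# Query tool with off_prompt=False: its small answer goes back to the LLM.
answer = run_tool(lambda q: query_memory(memory, ref, q), "timeline",
                  memory, off_prompt=False)
print(answer)  # The timeline splits into three branches after Ocarina of Time
```

The LLM still decides which tool to call and what to query for; it just never receives the full page.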

You can see that this call graph is more complex with TaskMemory. The agent still relies on the LLM to tell it what to query for in TaskMemory, and the LLM still finds the answer in the context data that the query retrieves. You may also notice there are twice as many calls to the LLM, but each of these calls uses only a fraction of the tokens of the large call in our original example, which didn’t use TaskMemory. As a result, these four calls to the LLM cost roughly ¼ as much as the two calls in the previous example.

Lastly, you may also notice a seemingly duplicate call at the end of the call stack, where the agent immediately re-sends the answer to the query back to the LLM so the LLM can construct a final response. This extra back-and-forth is required for the LLM to recognize that it now has enough information to answer the initial prompt and send back its response. While it seems superfluous here, we’ll soon explore an example where it makes more sense.

Under the Hood of TaskMemory

Before we move on, let’s talk a little about TaskMemory’s internal workings. When TaskMemory stores and queries data, it uses an embedding model to generate embeddings so the data can be searched by meaning. An embedding model is a type of natural language processing (NLP) model that turns text into a numerical vector representation. Unlike LLMs, embedding models are not generative: rather than producing text, they convert each input into a fixed-length vector, and pieces of text with similar meanings end up close together, so relevant data can be found with a similarity search.
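To show what “search by similarity” means, here is a toy sketch. It swaps in a bag-of-words count vector for a real embedding model (an assumption made so the example runs without any model), then ranks stored chunks by cosine similarity, which is the kind of comparison a vector store performs. The function names and sample chunks are hypothetical.

```python
import math
from collections import Counter
from typing import List


def toy_embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector.
    A real embedding model is a learned network that captures meaning, so
    related words such as "split" and "splits" would also land close together."""
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (1.0 = same direction)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, chunks: List[str], top_k: int = 1) -> List[str]:
    """Rank stored chunks by similarity to the query, as a vector store would."""
    q = toy_embed(query)
    ranked = sorted(chunks, key=lambda c: cosine_similarity(q, toy_embed(c)), reverse=True)
    return ranked[:top_k]


chunks = [
    "The timeline splits into three branches.",
    "Link is the hero of most games.",
    "Zelda is the princess of Hyrule.",
]
print(search("How does the timeline split?", chunks))
# ['The timeline splits into three branches.']
```

Only the best-matching chunks are handed to the model that answers the query, which is why the TaskMemory calls stay small.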

Using Multiple Models

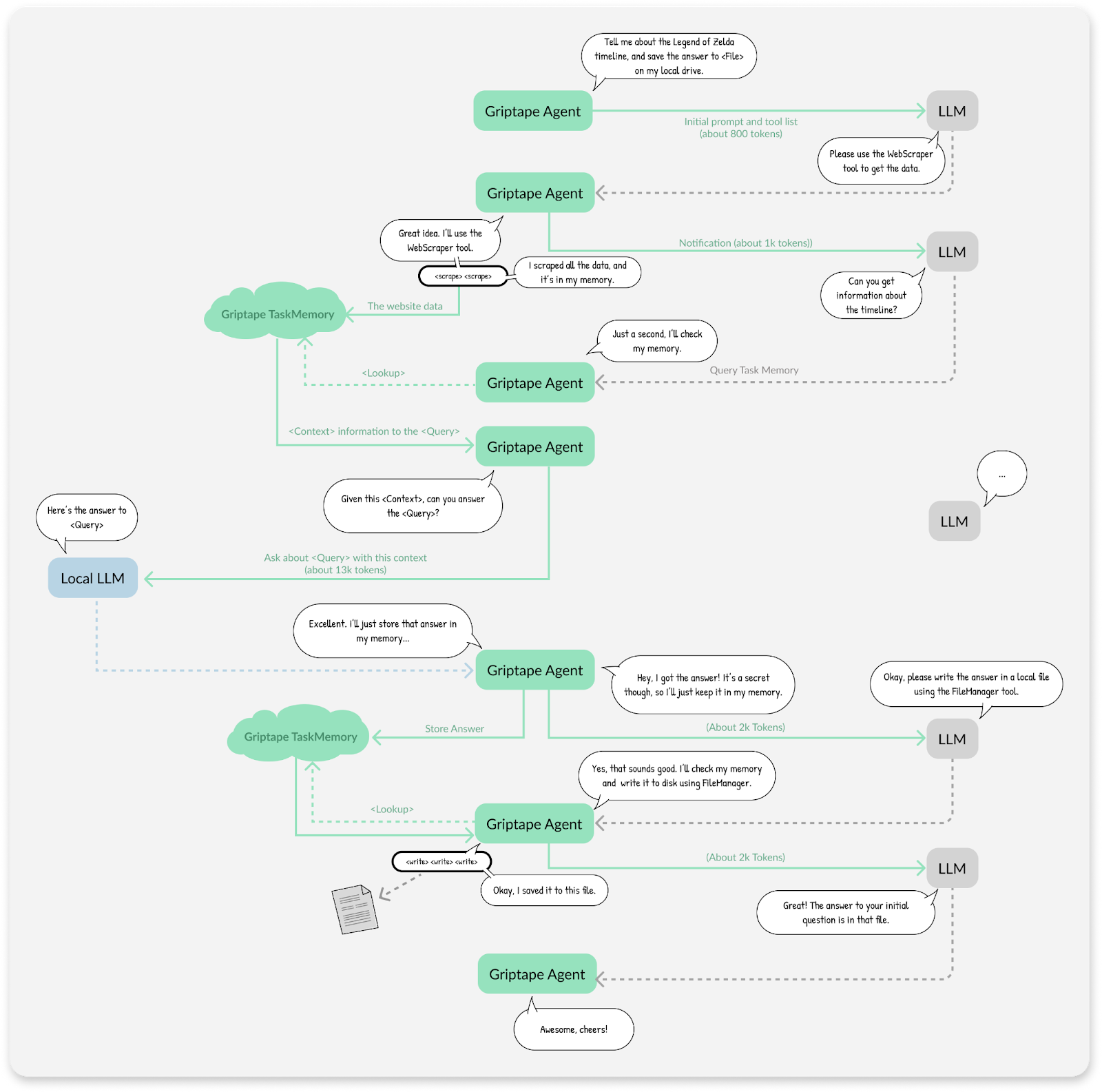

Your first question may be “Why would we want to use multiple models?” There can be many reasons, but data security and cost are two of the main drivers. In our previous example, while we were able to cut down the token count by using TaskMemory, the contents of the scraped website still made it back to the third-party (3P) LLM in the form of context data from the TaskMemory lookup. This was also the largest call, at ~13k tokens. Now suppose that instead of scraping a public-facing wiki page, you are scraping an internal wiki of company data. You may not want that data going to a 3P LLM in any form. This is where a second model comes in handy: it could be a locally hosted model that is internal to your company, or simply a cheaper model that takes over the most expensive call.

Let’s look at an example that still uses the same prompt, but this time directs the QueryTool to use a secondary model when it searches the data stored in TaskMemory. This example uses Amazon’s Titan model, but this could just as well be a local model. We also pass one more tool, the FileManagerTool, so the answer can be saved to a file instead of being sent to the 3P LLM, keeping the data away from it completely. This change also requires us to tell the LLM, in our initial prompt, to save the answer to a file.

You may notice that this call stack is largely the same; the key difference is that the call to find the answer in the scraped data is now offloaded to the second model instead of the 3P LLM. While there are still four calls to the 3P LLM, none of them exceeds 2k tokens or contains any of the data from the scraped website. The result is better data security for whatever data you are processing, at roughly ½ the cost.
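The data flow of this two-model setup can be replayed in a short sketch that records every message the 3P LLM would see. It is a hypothetical illustration, not Griptape code: the orchestrator’s decisions are hardcoded, `local_model` is a keyword-matching stand-in for Titan or a local model, the internal wiki text is made up, and it assumes the query result is also kept in memory so that only the file-manager step touches it.

```python
import tempfile
from pathlib import Path
from typing import Dict, List


def local_model(question: str, context: str) -> str:
    """Stand-in for the secondary model (Titan in the text, or a local model):
    returns the first sentence that mentions one of the question's longer words."""
    keywords = [w.strip("?.,").lower() for w in question.split() if len(w.strip("?.,")) > 4]
    for sentence in context.split(". "):
        if any(k in sentence.lower() for k in keywords):
            return sentence.rstrip(".")
    return "No answer found."


def run_pipeline(page: str, question: str, out_file: Path) -> List[str]:
    """Replay the four-step flow with hardcoded orchestrator decisions and
    return every message the 3P LLM would have been shown."""
    memory: Dict[str, str] = {}  # stand-in for TaskMemory
    seen_by_3p: List[str] = []   # prompts sent to the third-party LLM

    seen_by_3p.append(f"{question} Save the answer to {out_file.name}.")
    memory["scrape"] = page                                     # scraper, off-prompt
    seen_by_3p.append("Scraper output stored in memory under 'scrape'.")
    memory["answer"] = local_model(question, memory["scrape"])  # query on 2nd model
    seen_by_3p.append("Query output stored in memory under 'answer'.")
    out_file.write_text(memory["answer"])                       # file manager step
    seen_by_3p.append(f"Saved memory 'answer' to {out_file.name}.")
    return seen_by_3p


# Made-up internal data that should never reach the 3P LLM.
internal_page = "Project Hylia ships in Q3. The budget is confidential."
with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp) / "answer.txt"
    messages = run_pipeline(internal_page, "When does Project Hylia ship?", out)
    saved = out.read_text()

print(saved)                              # Project Hylia ships in Q3
print(any("Q3" in m for m in messages))  # False
```

The 3P LLM still orchestrates all four steps, but the answer itself only ever exists in memory and in the saved file.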

Note: As mentioned previously, TaskMemory calls an embedding model to store and search its data. If you wish to keep company data out of a 3P embedding model as well, you will need to host one yourself.

Wrapping Up

Hopefully this has helped you gain an understanding of the Griptape Framework and its unique feature of keeping data “off the prompt” using TaskMemory. For more code examples of how to use and configure TaskMemory, see the technical documentation. For more detailed walkthroughs on building applications using Griptape, you can check out the Griptape Trade School for courses and full source code. If you are looking for a place to host your Python code for production applications, you can sign up for a free account with Griptape Cloud.
